Emotion Based Music Player in Python

Introduction:

The Emotion-Based Music Player 3000 is a Python application that automatically plays YouTube music matched to your mood, using AI-driven facial emotion detection. It reads your facial expressions to estimate your current emotional state and streams music that fits that mood in real time, so you never have to choose songs by hand.

Purpose & Use Case:

Although music and emotion are closely related, picking the right song can be difficult when you’re depressed, exhausted, or nervous. By providing a hands-free, emotionally responsive music experience, this project addresses that issue. It’s perfect for:

- Relaxing after a long day.

- Personal mood-based music therapy.

- Smart room integrations or tech demos.

- Users who want an intelligent music assistant.

What Makes It Special?

Gone are the days of scrolling endlessly to find that perfect song. This project offers:

Facial Emotion Recognition: Uses your webcam to analyze facial features in real time.

AI-Powered Mood Detection: Detects emotions such as Happy, Sad, Angry, Neutral, or Surprised using machine learning models (for example, a Haar Cascade to locate the face and DeepFace to classify the emotion).

YouTube Integration: Plays emotion-specific music directly from YouTube using pywhatkit, pafy, or youtube_dl.

Real-Time Analysis: Continuously monitors your face to adapt music as your mood changes.

Hands-Free Operation: No clicks needed—just sit back and let your expressions do the talking.

Key Technologies Used:

| Technology | Purpose |
|---|---|
| OpenCV | Captures and processes webcam video frames |
| DeepFace / FER | Detects facial expressions and classifies emotions |
| PyWhatKit / Pafy | Plays YouTube music based on emotion keywords |
| Tkinter (Optional) | Adds GUI elements like mood display, logs, and controls |
| NumPy & Pandas | Handles data structures (for logs, emotion stats, etc.) |
| Threading | Allows non-blocking music play alongside webcam stream |

Detected Emotions and Music Mapping in Emotion Based Music Player In Python

| Emotion | Music Genre / Keywords |

|---|---|

| Happy | “Upbeat pop songs”, “Feel-good hits”, “Dance mix” |

| Sad | “Soft acoustic”, “Sad piano music”, “Emotional ballads” |

| Angry | “Rock anthems”, “Heavy metal”, “Rap battles” |

| Neutral | “Lo-fi beats”, “Chill background music” |

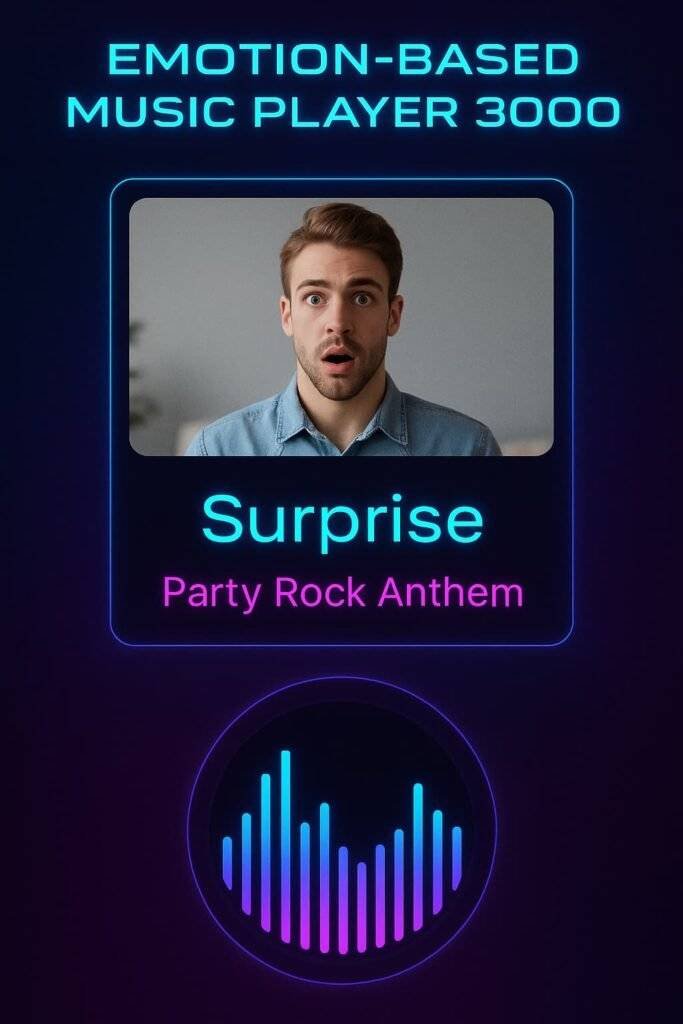

| Surprised | “Trending songs”, “Electronic pop” |

How It Works:

Capture Frame: Uses OpenCV to continuously access webcam feed.

Detect Face: Haar Cascades / CNN detects your face region.

Analyze Emotion: Extracts facial landmarks and passes them to an emotion classifier (e.g., DeepFace or a trained CNN).

Match to Mood: Maps the predicted emotion to a pre-defined set of music categories.

Stream Music: Opens YouTube in a browser tab and automatically plays a relevant track.

Repeat or Update: Re-checks emotions every few seconds to adapt to your mood in real time.
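The loop above can be sketched with the camera, classifier, and player passed in as plain callables, so only the control flow is shown. The names `run_pipeline`, `MOOD_QUERIES`, `classify`, and `play` are hypothetical and used for illustration only; the keywords are borrowed from the mapping table earlier in this article.

```python
from typing import Callable, Iterable, List, Optional

# Hypothetical mapping; the keywords come from the table in this article.
MOOD_QUERIES = {
    "happy": "upbeat pop songs",
    "sad": "soft acoustic",
    "angry": "rock anthems",
    "neutral": "lo-fi beats",
    "surprise": "trending songs",
}


def run_pipeline(
    frames: Iterable[object],
    classify: Callable[[object], Optional[str]],
    play: Callable[[str], None],
) -> List[str]:
    """Run capture -> classify -> map -> play, switching only on a mood change.

    Returns the list of queries that were actually sent to `play`.
    """
    played: List[str] = []
    last_mood: Optional[str] = None
    for frame in frames:
        mood = classify(frame)  # None means "no face found / not sure"
        if mood is None or mood == last_mood:
            continue  # keep the current track playing
        last_mood = mood
        # Unknown labels fall back to a neutral query (an assumption).
        query = MOOD_QUERIES.get(mood, "chill background music")
        play(query)
        played.append(query)
    return played
```

In a real app, `frames` would come from the webcam and `classify` would wrap the emotion model; here they stay abstract so the switching logic can be tried without any hardware.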

Use Cases:

Smart Personal Music Player – Adjusts songs based on your mood.

YouTubers / Streamers – Automatically picks music to match facial reactions.

Mental Health Tools – Encourages emotional regulation via music therapy.

Educational Projects – Perfect for showcasing AI + CV in tech expos or final-year projects.

Emotion-Based Music Player in Python

Let Your Emotions Control the Music – AI-Driven Personalized Soundtracks

In a world increasingly driven by artificial intelligence and personalization, the Emotion-Based Music Player 3000 rethinks how we interact with music. It detects your facial expression using AI-powered emotion recognition and plays mood-matching music in real time, with no manual input.

Built with Python, OpenCV, DeepFace, and YouTube integration, it brings together the best of AI and entertainment technology into one sophisticated yet accessible package.

Project Overview

Emotion-Based Music Player 3000 is designed for:

Emotion-Aware Music Streaming

AI/Machine Learning Education Projects

Demonstrating Real-Time Computer Vision Applications

Smart Assistive Tools for Mental Wellness

Instead of choosing songs manually, users can rely on the system’s AI to interpret their emotional cues and generate a playlist that resonates with their current state of mind.

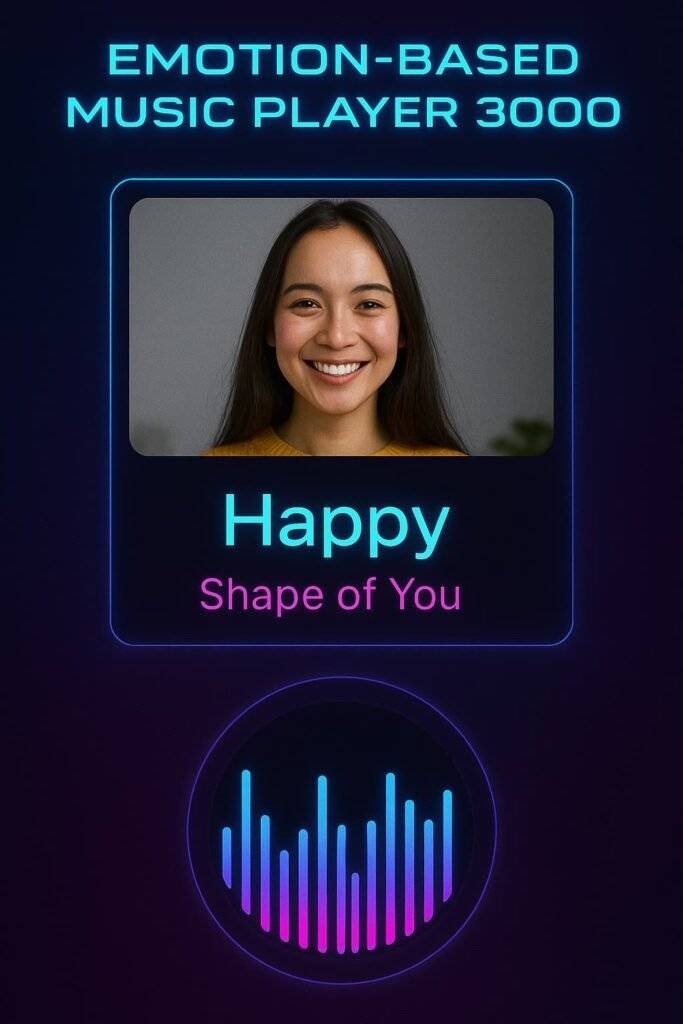

Imagine feeling sad, and the app automatically queues a comforting acoustic track… or feeling joyful, and the system switches to an upbeat dance anthem. That’s the emotional intelligence of Music Player 3000.

Why It Matters

Mental Health & Wellness: Music is a proven tool for emotional regulation. This system can subtly assist users in stress relief and mood management.

AI Awareness: Perfect for B.Tech/MCA projects, it demonstrates how emotion AI can be applied in daily life.

Next-Gen Personalization: With this project, media consumption becomes hands-free, mood-based, and intelligent.

Architecture Overview

Here’s how the system works under the hood:

1. Webcam Feed

Uses OpenCV to capture live video feed.

Frame-by-frame analysis allows constant emotion monitoring.

2. Emotion Analysis

DeepFace or the fer library processes facial landmarks.

Detects emotions like:

Happy

Sad

Angry

Surprised

Neutral

Fear (optional)

3. Emotion-Music Mapping

Each emotion is linked to a keyword search:

    emotion_music_map = {
        "happy": "happy upbeat songs",
        "sad": "sad emotional tracks",
        "angry": "aggressive rock music",
        "neutral": "lofi chill beats",
        "surprise": "trending party mix"
    }
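A lookup against this map usually needs two small safeguards: normalizing the label, and falling back to a default when the detector reports an emotion that has no entry (such as fear). The sketch below is one way to do that; `query_for`, `ALIASES`, and `DEFAULT_QUERY` are hypothetical names, and the fallback keyword is simply the default used in the full source further down.

```python
emotion_music_map = {
    "happy": "happy upbeat songs",
    "sad": "sad emotional tracks",
    "angry": "aggressive rock music",
    "neutral": "lofi chill beats",
    "surprise": "trending party mix",
}

# Assumption: labels may arrive with different casing or as "surprised".
ALIASES = {"surprised": "surprise"}
DEFAULT_QUERY = "relaxing ambient music"  # used when an emotion has no entry


def query_for(emotion: str) -> str:
    """Return the search keywords for an emotion label, with a safe fallback."""
    key = emotion.strip().lower()
    key = ALIASES.get(key, key)
    return emotion_music_map.get(key, DEFAULT_QUERY)
```

For example, `query_for("Happy")` and `query_for("happy")` resolve to the same keywords, and an unmapped label such as `"fear"` returns the default query instead of raising a `KeyError`.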

4. Playback via YouTube

Uses pywhatkit.playonyt() or webbrowser.open() to play mood-based music.

Alternatively, can stream audio using pafy or vlc bindings.
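As a minimal sketch of the `webbrowser.open()` route, the helper below builds a YouTube search URL from the mood keywords using only the standard library. The function names are hypothetical, and note that this opens the search results page rather than auto-playing a track, which is the part pywhatkit is meant to handle.

```python
import webbrowser
from urllib.parse import quote_plus


def youtube_search_url(query: str) -> str:
    """Build a YouTube search URL for the given keywords."""
    return "https://www.youtube.com/results?search_query=" + quote_plus(query)


def open_search(query: str) -> bool:
    """Open the search page in the default browser; True if a browser launched."""
    return webbrowser.open(youtube_search_url(query))
```

Calling `open_search("lofi chill beats")` would open a new browser tab with the matching results.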

5. Real-Time Looping

Loop runs every few seconds to re-analyze emotions and update playback dynamically.
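One practical concern with re-analyzing every few seconds is that a single misread frame could switch the song. A possible remedy, not part of the original design, is to take a majority vote over the last few readings. `MoodSmoother`, `window`, and `min_votes` are hypothetical names chosen for this sketch.

```python
from collections import Counter, deque
from typing import Deque, Optional


class MoodSmoother:
    """Report a mood change only when it wins a vote over recent readings."""

    def __init__(self, window: int = 5, min_votes: int = 3) -> None:
        self.recent: Deque[str] = deque(maxlen=window)  # last `window` readings
        self.min_votes = min_votes
        self.current: Optional[str] = None  # the stable mood, once known

    def update(self, emotion: str) -> bool:
        """Add a reading; return True if the stable mood just changed."""
        self.recent.append(emotion)
        top, votes = Counter(self.recent).most_common(1)[0]
        if votes >= self.min_votes and top != self.current:
            self.current = top
            return True
        return False
```

The playback code would then start a new track only when `update()` returns `True`, at the cost of reacting a few readings later.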

Tech Stack Summary

| Technology | Use |
|---|---|
| Python 3.7+ | Core programming |
| OpenCV | Webcam capture and frame processing |
| DeepFace / FER | Facial emotion recognition |
| PyWhatKit | YouTube song playback |
| Webbrowser | Alternate method for video streaming |
| Tkinter (optional) | For GUI-based version |
| Threading | Keeps video and playback non-blocking |

Real-World Applications

Mental Wellness Apps – Detect mood dips and play uplifting tracks.

Gaming Consoles – Dynamically adjust background music based on player reactions.

Educational Demonstrations – AI in action for ML/AI coursework or tech exhibitions.

Corporate Use – Automatically personalize background music in break rooms or offices.

YouTube/Streaming Assistants – Change music based on a content creator’s face on screen.

GUI Enhancement (Optional Tkinter)

Add a user interface to:

Show detected mood.

Display currently playing track.

Offer a stop/play button.

Maintain a history of mood changes.
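The mood history item can be kept separate from the GUI toolkit, so any widget can render it later. The sketch below is one possible data structure; `MoodEvent` and `MoodHistory` are hypothetical names, and it records an entry only when the mood actually changes.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass
class MoodEvent:
    emotion: str
    timestamp: float  # seconds since the epoch


class MoodHistory:
    """Keep the most recent mood changes, oldest first."""

    def __init__(self, max_events: int = 100) -> None:
        self.events: Deque[MoodEvent] = deque(maxlen=max_events)

    def record(self, emotion: str, now: Optional[float] = None) -> bool:
        """Store a mood change; repeated readings of the same mood are ignored."""
        if self.events and self.events[-1].emotion == emotion:
            return False
        self.events.append(MoodEvent(emotion, time.time() if now is None else now))
        return True

    def as_lines(self) -> List[str]:
        """Format the history as 'HH:MM:SS  emotion' strings for a list widget."""
        return [
            time.strftime("%H:%M:%S", time.localtime(e.timestamp)) + "  " + e.emotion
            for e in self.events
        ]
```

A Tkinter text box or list widget could simply display the strings returned by `as_lines()`.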

Benefits of Using This System

| Feature | Advantage |
|---|---|
| Real-time Emotion Reading | Automatically adapts playback to live emotions |
| YouTube Integration | Access to a wide range of tracks instantly |
| Lightweight Codebase | Easily modifiable for students and developers |
| No External Playlist Required | Finds tracks through keyword-based YouTube search |
| User-Friendly | Little setup needed beyond a webcam, Python, and the required packages |

Future Scope and Enhancements

Spotify API: Integrate Spotify playback for ad-free listening.

Train Custom Models: Build custom CNNs for emotion detection tailored to specific demographics.

Mobile Version: Port to mobile using Kivy or Flutter + Python backend.

Voice Feedback: Add a voice bot that speaks detected mood or suggests playlists.

Mood Analytics Dashboard: Show emotional trends over time with graphs.

Therapist Mode: Suggest relaxation techniques or breathing exercises.

Privacy Considerations

By default, the app does not store any video or emotion data.

However, developers can add consent-based features to:

Log mood history

Save images for accuracy analysis

Share data with therapists or researchers

Ensure any such feature is opt-in and clearly explained to end users.
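As a rough illustration of an opt-in feature, the logger below writes nothing unless consent has been given explicitly. `MoodLogger` and `consent_given` are hypothetical names; how consent is collected and stored is left to the developer.

```python
import csv
import io
from datetime import datetime, timezone
from typing import Optional, TextIO


class MoodLogger:
    """Write mood entries as CSV rows, but only after explicit consent."""

    def __init__(self, sink: TextIO, consent_given: bool = False) -> None:
        self.consent_given = consent_given  # logging is off by default
        self._writer = csv.writer(sink)

    def log(self, emotion: str, when: Optional[datetime] = None) -> bool:
        """Append one row; return False (and write nothing) without consent."""
        if not self.consent_given:
            return False
        when = when or datetime.now(timezone.utc)
        self._writer.writerow([when.isoformat(), emotion])
        return True


# Usage: an in-memory sink stands in for a real log file.
buffer = io.StringIO()
MoodLogger(buffer).log("sad")  # ignored, because consent defaults to False
```

Only the emotion label and a timestamp are stored here; no image data ever reaches the log.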

Educational Value

Demonstrates practical applications of AI in daily life

Combines computer vision, deep learning, and web APIs

Great for machine learning project submissions

Helps students learn about real-time emotion analysis pipelines

Final Words

The Emotion-Based Music Player 3000 isn’t just a project; it’s a glimpse into the future of emotion-aware computing. Whether you’re a student looking for a showstopper project, a developer experimenting with human-AI interaction, or simply someone who loves music and technology, this tool will inspire and engage.

Steps of Making an Emotion-Based Music Player in Python

1. Face Detection: The webcam captures your facial expression using OpenCV.

2. Emotion Analysis: DeepFace detects your current mood (e.g., Happy, Sad, Angry, Neutral).

3. Music Search: Based on your emotion, the app searches YouTube for suitable songs.

4. Audio Streaming: yt-dlp and VLC stream the song directly without downloading it.

5. Visual Feedback: A modern animated visualizer runs while the music plays, creating an immersive effect.

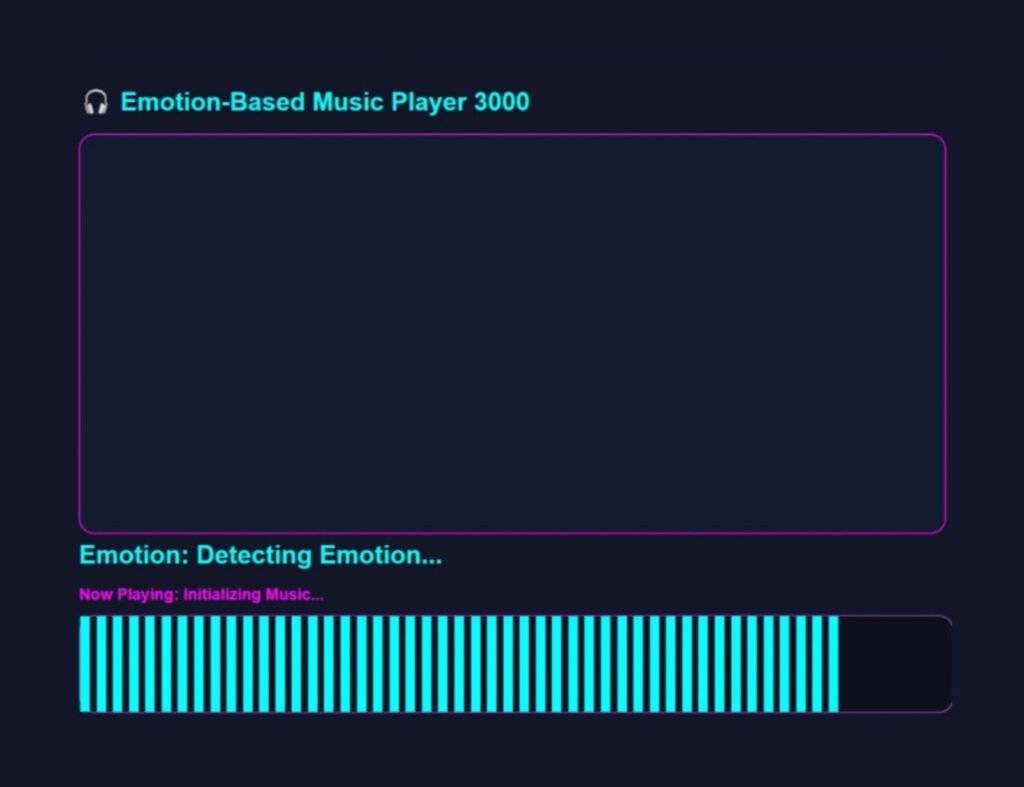

User Interface:

Built with CustomTkinter, the UI has a sleek dark theme with glowing labels and animated bars. It displays:

- Live webcam feed.

- Detected emotion in glowing text.

- The currently playing song title.

- A pulsating music visualizer at the bottom.

There are no buttons and no manual input: just launch the app and let it play the right music for your mood.

Why It’s Unique:

- Real-time emotion-to-music intelligence.

- No playlists, no searches — just you and your emotions.

- Uses AI + live YouTube streaming + animated UI — all in one app.

- 100% automatic, stylish, and futuristic

Steps To Create Emotion Based Music Player In Python

Required Modules Or Packages:

1. OpenCV: Captures real-time webcam video for face detection.

2. DeepFace: Detects emotions from your face using deep learning models.

3. CustomTkinter: Creates a modern, dark-themed GUI with a more polished look than standard Tkinter.

4. yt-dlp: Extracts the YouTube audio stream directly, without downloading full videos.

5. VLC Python Bindings: Play the YouTube audio using the VLC media backend.

6. YouTube Search Python: Searches YouTube videos based on your detected emotion (no API key needed).

7. NumPy: Powers the animated visualizer by generating random waveform bar heights.

8. Pillow (PIL): Converts the webcam frames into images that can be displayed inside the GUI.

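To install these packages, use the following commands. Note that python-vlc is only a binding, so the VLC media player itself must also be installed on your system: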

1. OpenCV:

pip install opencv-python

2. DeepFace:

pip install deepface

3. CustomTkinter:

pip install customtkinter

4. yt-dlp:

pip install yt-dlp

5. VLC Python Bindings:

pip install python-vlc

6. YouTube Search Python:

pip install youtube-search-python

7. NumPy:

pip install numpy

8. Pillow (PIL):

pip install pillow
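After installing, a quick check like the one below can confirm that every package is importable before launching the app. It is a small sketch using only the standard library; `REQUIRED_MODULES` and `missing_modules` are hypothetical names, and the list holds import names, which differ from the pip names for some packages.

```python
import importlib.util
from typing import Iterable, List

# Import names (not pip names) for the packages listed above.
REQUIRED_MODULES = [
    "cv2",                  # opencv-python
    "deepface",
    "customtkinter",
    "yt_dlp",               # yt-dlp
    "vlc",                  # python-vlc
    "youtubesearchpython",  # youtube-search-python
    "numpy",
    "PIL",                  # pillow
]


def missing_modules(names: Iterable[str] = REQUIRED_MODULES) -> List[str]:
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("All packages found." if not missing else f"Missing: {missing}")
```

This only verifies that the Python packages are present; it does not check that the VLC application itself is installed.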

How To Run The Code:

Method 1:

Step 1. Download and install Visual Studio Code (VS Code) on your PC or laptop from the official VS Code website.

Step 2. Open CMD as Administrator and install the packages above using pip.

Step 3. Open Visual Studio Code.

Step 4. Create a file named main.py.

Step 5. Copy and paste the code from the link given below.

Step 6. After pasting the code, save the file and click the Run button.

Step 7. You will now see the output.

Method 2:

Step 1. Download and install Visual Studio Code (VS Code) on your PC or laptop from the official VS Code website.

Step 2. Open CMD as Administrator and install the packages above using pip.

Step 3. Open the link provided below.

Step 4. Download the ZIP file of the code.

Step 5. Extract the ZIP file and open the folder in VS Code.

Step 6. Go to the main file and click the Run button.

Step 7. You will now see the output of the emotion-based music player.

Code Explanation:

This Python code creates the Emotion-Based Music Player. Make sure you have installed the modules listed above.

Importing Required Libraries:

    import cv2
    import threading
    from deepface import DeepFace
    import customtkinter as ctk
    from PIL import Image, ImageTk
    from youtubesearchpython import VideosSearch
    import yt_dlp
    import vlc
    import time
    import numpy as np

- OpenCV (cv2): Captures real-time webcam video for face detection.

- DeepFace: Detects emotions from your face using deep learning models.

- CustomTkinter (ctk): Creates the modern, dark-themed GUI.

- yt-dlp (yt_dlp): Extracts the YouTube audio stream directly, without downloading full videos.

- VLC Python Bindings (vlc): Play the YouTube audio using the VLC media backend.

- YouTube Search Python (youtubesearchpython): Searches YouTube videos based on your detected emotion (no API key needed).

- NumPy (np): Powers the animated visualizer by generating random waveform bar heights.

- Pillow (PIL): Converts the webcam frames into images that can be displayed inside the GUI.

🧠 1. Real-Time Emotion Detection:

    ret, frame = self.vid.read()
    result = DeepFace.analyze(frame, actions=['emotion'], enforce_detection=False)
    emotion = result[0]['dominant_emotion']

self.vid.read(): Captures a single frame from the webcam.

DeepFace.analyze(…): Uses AI to analyze the face in the frame.

dominant_emotion: Identifies the strongest emotion on the face.

This emotion is later used to choose a matching song.
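One detail worth guarding against: as far as I know, the shape of the value returned by `DeepFace.analyze` has differed between releases (a single dict in some older versions, a list with one dict per face in newer ones). Treat that as an assumption to verify against your installed version. The hypothetical helper below accepts either shape, so the rest of the code does not need to care.

```python
from typing import Any, Optional


def dominant_emotion(result: Any) -> Optional[str]:
    """Extract 'dominant_emotion' from an analysis result of either shape.

    Accepts a dict or a list of dicts; returns None when nothing usable is found.
    """
    if isinstance(result, list):
        if not result:
            return None  # no faces were reported
        result = result[0]  # use the first detected face
    if isinstance(result, dict):
        return result.get("dominant_emotion")
    return None
```

With this in place, the snippet above could read `emotion = dominant_emotion(result)` and skip the frame whenever the helper returns `None`.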

2. YouTube Music Search:

    def search_youtube(self, query):
        results = VideosSearch(query, limit=1).result()
        video_url = results['result'][0]['link']
        return video_url

This function uses the youtube-search-python package.

It searches YouTube based on the emotion, e.g., “sad mood song”.

It then returns the link of the top video.
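Indexing `results['result'][0]` raises an `IndexError` when a search comes back empty. A defensive variant might look like the sketch below, which assumes the result dictionary has the same shape the function above relies on (a `'result'` list whose items carry `'link'` and `'title'`). `first_video` is a hypothetical helper name.

```python
from typing import Any, Dict, Optional, Tuple


def first_video(results: Dict[str, Any]) -> Optional[Tuple[str, str]]:
    """Return (link, title) of the first usable search hit, or None if there is none."""
    items = results.get("result") or []
    for item in items:
        link = item.get("link")
        if link:
            return link, item.get("title", "Unknown title")
    return None
```

The caller can then keep the current song playing whenever `first_video(...)` returns `None`, instead of crashing the detection loop.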

3. Audio Streaming with yt-dlp & VLC:

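    def play_song(self, url):
        # Ask yt-dlp for the best audio format without downloading the file
        with yt_dlp.YoutubeDL({'format': 'bestaudio'}) as ydl:
            info = ydl.extract_info(url, download=False)
            stream_url = info['url']
        # Hand the direct stream URL to the VLC media player
        self.player.set_mrl(stream_url)
        self.player.play()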

yt-dlp: Extracts the direct audio URL from the YouTube video.

VLC: Plays the audio stream without downloading the full video.
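The snippet reads `info['url']` directly, which works when yt-dlp resolves a single format. As a hedged fallback, the helper below also looks through a `formats` list and prefers audio-only entries. The field names `formats`, `vcodec`, and `abr` are my understanding of yt-dlp's info dictionary and should be checked against its documentation; `pick_audio_url` is a hypothetical name.

```python
from typing import Any, Dict, Optional


def pick_audio_url(info: Dict[str, Any]) -> Optional[str]:
    """Choose a stream URL from a yt-dlp style info dict, or None if absent."""
    direct = info.get("url")
    if direct:
        return direct  # a single format was already selected

    formats = [f for f in info.get("formats", []) if f.get("url")]
    # Assumption: audio-only formats are marked with vcodec == "none".
    audio_only = [f for f in formats if f.get("vcodec") == "none"]
    candidates = audio_only or formats
    if not candidates:
        return None
    # Prefer the highest audio bitrate; a missing bitrate counts as 0.
    best = max(candidates, key=lambda f: f.get("abr") or 0)
    return best["url"]
```

Returning `None` rather than raising lets the player skip a track that has no playable stream.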

4. Animated Music Visualizer:

    # random comes from the standard library (import random)
    self.canvas.delete("all")
    for i in range(30):
        height = random.randint(10, 100)
        x = i * 15
        self.canvas.create_rectangle(x, 100 - height, x + 10, 100, fill="cyan")

This block of code creates a live visualizer effect using random bar heights.

Each rectangle simulates part of a waveform.

It visually represents the playing music in a modern style.
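The geometry behind the bars can be separated from the canvas so it is easy to check on its own. The sketch below computes the rectangle coordinates only; `bar_rects` and its parameters are hypothetical names, with defaults mirroring the numbers in the snippet above (30 bars, 10 pixels wide, 15 pixels apart, on a canvas 100 pixels tall).

```python
import random
from typing import List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x0, y0, x1, y1), the order create_rectangle takes


def bar_rects(
    num_bars: int = 30,
    bar_width: int = 10,
    gap: int = 5,
    canvas_height: int = 100,
    rng: Optional[random.Random] = None,
) -> List[Rect]:
    """Return one rectangle per bar, each with a random height, anchored to the bottom."""
    rng = rng or random.Random()
    rects: List[Rect] = []
    for i in range(num_bars):
        height = rng.randint(10, canvas_height)  # inclusive on both ends
        x0 = i * (bar_width + gap)
        # Canvas y grows downward, so a taller bar has a smaller top coordinate.
        rects.append((x0, canvas_height - height, x0 + bar_width, canvas_height))
    return rects
```

Each tuple can be passed straight to `canvas.create_rectangle(*rect, fill="cyan")`. Because the heights are random, the bars do not follow the actual audio signal.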

5. Futuristic UI with CustomTkinter:

    self.label = ctk.CTkLabel(self.root, text="Emotion-Based Music Player 3000", font=("Orbitron", 20))
    self.label.pack()

It uses customtkinter for a dark-themed, modern UI.

Components like labels, frames, and the canvas are all styled with a futuristic design.

Live updates show the current emotion and song title.

Source Code

    import cv2
    import queue
    import threading
    import time

    import customtkinter as ctk
    import numpy as np
    import vlc
    import yt_dlp
    from deepface import DeepFace
    from PIL import Image, ImageTk
    from youtubesearchpython import VideosSearch

    # --- App Setup ---
    ctk.set_appearance_mode("dark")
    ctk.set_default_color_theme("dark-blue")

    app = ctk.CTk()
    app.title("🎧 Emotion-Based Music Player 3000")
    app.geometry("1100x800")

    # --- Global Variables ---
    emotion_var = ctk.StringVar(value="Detecting Emotion...")
    song_var = ctk.StringVar(value="Initializing Music...")
    last_emotion = ""
    player = None
    running = True
    latest_frame = None            # newest webcam frame, shared with the detection thread
    frame_lock = threading.Lock()  # guards latest_frame
    ui_queue = queue.Queue()       # (StringVar, text) updates applied on the Tk main thread

    # --- Neon Background Frame ---
    bg_frame = ctk.CTkFrame(app, fg_color="#0d0f1e", corner_radius=0)
    bg_frame.place(relwidth=1, relheight=1)

    # --- Main Futuristic Container ---
    # Recent CustomTkinter versions reject width/height passed to place(),
    # so the size is given to the constructor instead.
    main_frame = ctk.CTkFrame(bg_frame, width=1000, height=700, fg_color="#131729",
                              corner_radius=25, border_width=3, border_color="#00ffff")
    main_frame.pack_propagate(False)  # keep the fixed size when children are packed
    main_frame.place(relx=0.5, rely=0.5, anchor="center")

    # --- Webcam Video ---
    video_frame = ctk.CTkFrame(main_frame, fg_color="#141c2f", border_width=2, border_color="#ff00ff", corner_radius=20)
    video_frame.pack(pady=20)

    video_label = ctk.CTkLabel(video_frame, text="")
    video_label.pack()

    # --- Neon Emotion Label ---
    emotion_label = ctk.CTkLabel(main_frame, textvariable=emotion_var,
                                 font=ctk.CTkFont("Arial", size=32, weight="bold"),
                                 text_color="#00ffff")
    emotion_label.pack(pady=(20, 5))

    # --- Song Title Label ---
    song_title_label = ctk.CTkLabel(main_frame, textvariable=song_var,
                                    font=ctk.CTkFont("Arial", size=20, weight="bold"),
                                    text_color="#ff00ff")
    song_title_label.pack(pady=(0, 15))

    # --- Music Visualizer ---
    visualizer_frame = ctk.CTkFrame(main_frame, fg_color="#0f0f1f", corner_radius=20, border_color="#8e44ad", border_width=2)
    visualizer_frame.pack(pady=10)

    visualizer_canvas = ctk.CTkCanvas(visualizer_frame, width=700, height=120, bg="#0f0f1f", highlightthickness=0)
    visualizer_canvas.pack(pady=10)

    bars = []
    bar_width = 12
    gap = 4
    num_bars = 45

    for i in range(num_bars):
        x = i * (bar_width + gap)
        bar = visualizer_canvas.create_rectangle(x, 120, x + bar_width, 120, fill="#00ffff", outline="")
        bars.append(bar)

    # --- Visualizer Animation ---
    def animate_visualizer():
        if not running:
            return
        for bar in bars:
            height = np.random.randint(25, 110)
            x0, _, x1, _ = visualizer_canvas.coords(bar)
            visualizer_canvas.coords(bar, x0, 120 - height, x1, 120)
        visualizer_canvas.after(80, animate_visualizer)

    # --- Glowing Emotion Animation ---
    def pulse_emotion():
        colors = ["#00ffff", "#ff00ff", "#8e44ad", "#00ffd5"]
        idx = 0

        def cycle():
            nonlocal idx
            emotion_label.configure(text_color=colors[idx % len(colors)])
            idx += 1
            if running:
                emotion_label.after(400, cycle)

        cycle()

    # --- YouTube Stream ---
    def search_youtube_video(emotion: str):
        emotion_to_query = {
            "Happy": "happy upbeat pop songs",
            "Sad": "emotional hindi songs",
            "Angry": "aggressive rap music",
            "Neutral": "lofi chillhop mix",
            "Surprise": "exciting edm tracks",
            "Fear": "calming piano music",
            "Disgust": "motivational workout music"
        }
        query = emotion_to_query.get(emotion, "relaxing ambient music")
        search = VideosSearch(query, limit=1)
        result = search.result()
        url = result['result'][0]['link']
        title = result['result'][0]['title']
        return url, title

    def stream_youtube_audio(url):
        global player
        if player:
            player.stop()

        ydl_opts = {
            'format': 'bestaudio/best',
            'quiet': True,
            'noplaylist': True,
        }

        with yt_dlp.YoutubeDL(ydl_opts) as ydl:
            info = ydl.extract_info(url, download=False)
            audio_url = info['url']

        instance = vlc.Instance()
        player = instance.media_player_new()
        media = instance.media_new(audio_url)
        player.set_media(media)
        player.play()

    # --- Emotion Detection Thread ---
    def start_camera():
        cap = cv2.VideoCapture(0)

        def detect_emotion():
            global last_emotion
            while running:
                # Work on a copy of the newest frame; only update_frame() reads the camera
                with frame_lock:
                    frame = None if latest_frame is None else latest_frame.copy()
                if frame is None:
                    time.sleep(0.1)
                    continue
                try:
                    result = DeepFace.analyze(frame, actions=['emotion'], enforce_detection=False)
                    emotion = result[0]['dominant_emotion'].capitalize()
                    ui_queue.put((emotion_var, f"Emotion: {emotion}"))

                    if emotion != last_emotion:
                        last_emotion = emotion
                        url, title = search_youtube_video(emotion)
                        ui_queue.put((song_var, f"Now Playing: {title}"))
                        stream_youtube_audio(url)
                except Exception:
                    ui_queue.put((emotion_var, "Emotion: Unknown"))
                time.sleep(2)

        threading.Thread(target=detect_emotion, daemon=True).start()

        def update_frame():
            global latest_frame
            if not running:
                return
            ret, frame = cap.read()
            if ret:
                with frame_lock:
                    latest_frame = frame
                rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                img = Image.fromarray(rgb)
                imgtk = ImageTk.PhotoImage(image=img)
                video_label.imgtk = imgtk  # keep a reference so the image is not garbage-collected
                video_label.configure(image=imgtk)
            # Apply label updates queued by the detection thread (Tk calls stay on the main thread)
            while not ui_queue.empty():
                var, text = ui_queue.get_nowait()
                var.set(text)
            video_label.after(30, update_frame)

        update_frame()
        return cap

    # --- Clean Exit ---
    def on_closing():
        global running
        running = False
        if player:
            player.stop()
        cap.release()
        app.destroy()

    app.protocol("WM_DELETE_WINDOW", on_closing)

    # --- Start App ---
    cap = start_camera()
    animate_visualizer()  # start the bar animation once, on the main thread
    pulse_emotion()
    app.mainloop()

Output: